In 2023, Optus rolled out AI-generated captions for their live sports streams, aiming to modernize how fans experience football. The results were... memorable, for the wrong reasons.

Words were mistranslated, context was butchered, and players' names became unrecognizable. What was supposed to be a seamless, tech-forward viewer upgrade became a case study in how not to deploy AI in production.

But the real story isn’t about one telecom company’s failed experiment.

It’s about what happens when AI is treated as a shortcut, not a system. This article unpacks why that approach fails and what engineering leaders can learn from it.

Where the AI Pipe Breaks: No Validation, No Feedback, No Recovery

What went wrong?

No Data Validation Layer: Real-time captioning requires more than just speech-to-text. It needs filters to detect and correct jargon, player names, and slang. Optus seemingly had none.

No Human-in-the-Loop (Fallback): When the AI failed, there was no escalation path or override. Once the captions derailed, they stayed off track: live, unfiltered, and embarrassingly so.

No Feedback Loop: AI systems improve over time only if they can learn from their mistakes. The Optus deployment didn’t allow feedback from viewers or moderators. Worse, nothing stopped the same mistakes from repeating game after game.

This wasn’t just an AI failure. It was an architecture failure.
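To make the first gap concrete, here is a minimal Python sketch of a name-correction filter, one small piece of a validation layer. It assumes the transcription engine returns plain text and that a match-day roster is available. The roster, the alias table, and the 0.8 similarity cutoff are hypothetical illustration values, and the fuzzy matching uses only the standard library's `difflib.get_close_matches`.

```python
import difflib
import re

# Hypothetical match-day roster; a real system would load this from a metadata feed.
ROSTER = ["Saka", "Haaland", "Salah", "Rashford"]
# Hypothetical alias table for known mishearings that fuzzy matching alone misses.
ALIASES = {"holland": "Haaland"}

# Map lowercase forms back to the canonical roster spelling.
_CANONICAL = {name.lower(): name for name in ROSTER}


def _fix_token(match: re.Match) -> str:
    token = match.group(0)
    key = token.lower()
    if key in ALIASES:
        return ALIASES[key]
    # Skip short tokens to avoid "correcting" ordinary words into names.
    if len(key) < 4:
        return token
    close = difflib.get_close_matches(key, list(_CANONICAL), n=1, cutoff=0.8)
    return _CANONICAL[close[0]] if close else token


def correct_names(caption: str) -> str:
    """Replace likely misrecognized player names with their roster spelling."""
    return re.sub(r"[A-Za-z']+", _fix_token, caption)
```

For example, `correct_names("great pass from sakka")` returns `"great pass from Saka"`. The explicit alias table matters because fuzzy matching alone misses some mishearings ("holland" scores only about 0.71 against "haaland") and can also over-correct ordinary words that resemble a name: at this cutoff, "salad" would become "Salah". That is one more reason the flagged mistakes need to flow back into the system.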

GenAI Isn’t Plug-and-Play. It’s Orchestrate-and-Evolve

We often hear, “Just add GenAI.” But intelligent systems don’t work like that.

Especially in media and entertainment, where user experience is live, emotional, and unforgiving, GenAI requires:

Real-time decision-making

Domain-specific tuning

Escalation protocols

Continuous learning loops

In short, it needs orchestration.

Modernizing your AI systems isn’t about swapping out one tool for another. It’s about building a composable, event-driven architecture where every failure is catchable and improvable.
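Escalation protocols and catchable failures can be illustrated with a small routing function. This is a sketch under stated assumptions, not a production design: it assumes each transcribed segment carries a confidence score, and both the 0.85 threshold and the policy of holding low-confidence segments for a human moderator instead of publishing them are hypothetical choices.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Segment:
    text: str
    confidence: float  # 0.0-1.0 score from the transcription engine (assumed available)


def route_segment(
    seg: Segment,
    validate: Callable[[str], str],
    publish: Callable[[str], None],
    escalate: Callable[[Segment, str], None],
    threshold: float = 0.85,  # hypothetical cutoff; tune per sport and language
) -> str:
    """Publish a caption only if it is confident and validates; otherwise escalate."""
    if seg.confidence < threshold:
        escalate(seg, "low confidence")  # hold for a human moderator instead of airing it
        return "escalated"
    try:
        caption = validate(seg.text)
    except Exception as exc:  # a failing validator must never crash the live stream
        escalate(seg, f"validator error: {exc}")
        return "escalated"
    publish(caption)
    return "published"
```

Because `validate`, `publish`, and `escalate` are passed in as plain callables, the same routing logic could sit behind a serverless function, a queue consumer, or a unit test without changes.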

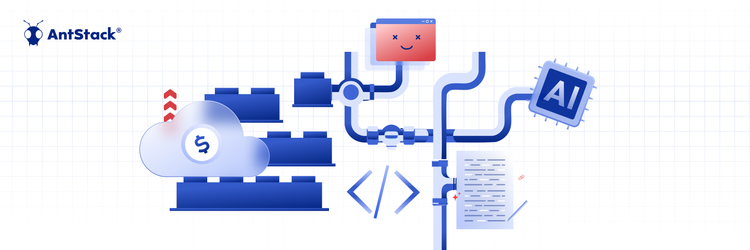

Reference Blueprint: Serverless + GenAI for Real-Time Caption Validation

Here’s what a resilient architecture could look like:

Live Audio Stream

⬇

AI Transcription Engine (e.g. Whisper, Amazon Transcribe)

⬇

Metadata Validator (Player Names, Team Terms, Language Filters)

⬇

Caption Output (UI & CDN Distribution)

⬇

Feedback Collector (User flags, moderator input)

⬇

Training Loop (Reinforcement + Fine-Tuning Models)
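The last two stages are where the missing feedback loop would live. The sketch below covers only data preparation for the Training Loop, not the training itself. It assumes flags arrive as (shown caption, suggested correction, source) records, trusts any moderator correction outright, and accepts a viewer correction only after a hypothetical minimum of three matching votes. The `input`/`target` JSONL field names are placeholders; each fine-tuning service defines its own format.

```python
import json
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Flag:
    shown: str      # caption text viewers actually saw
    corrected: str  # what the flagger says it should have been
    source: str     # "viewer" or "moderator" (hypothetical labels)


def build_training_pairs(flags: Iterable[Flag], min_viewer_votes: int = 3) -> List[Tuple[str, str]]:
    """Keep moderator-confirmed corrections plus viewer corrections with enough votes."""
    flags = list(flags)  # allow a single-pass iterable to be read twice
    votes = Counter((f.shown, f.corrected) for f in flags if f.source == "viewer")
    accepted = {(f.shown, f.corrected) for f in flags if f.source == "moderator"}
    accepted |= {pair for pair, n in votes.items() if n >= min_viewer_votes}
    return sorted(accepted)


def to_jsonl(pairs: List[Tuple[str, str]]) -> str:
    """Serialize pairs as JSON Lines, one training example per line."""
    return "\n".join(json.dumps({"input": s, "target": c}) for s, c in pairs)
```

Besides retraining, accepted pairs could extend the name lists used by the Metadata Validator stage, so a fix confirmed during one match protects the next.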

All orchestrated with:

AWS Lambda / Step Functions: for event-driven caption flow

Amazon Bedrock or the OpenAI API: for GenAI-generated corrections and summaries

Amazon EventBridge: for rule-based alerts when confidence drops

DynamoDB / S3: to store flagged data and annotations for model retraining

A serverless design like this scales with match-day demand and keeps costs tied to actual usage, while the validation, alerting, and feedback stages preserve accuracy and control.
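As one hedged example of how the orchestration pieces listed above could connect, here is a Python Lambda handler that flags low-confidence caption segments. `put_events` on the EventBridge client and `Table.put_item` on the DynamoDB resource are standard boto3 calls; the event shape, environment variable names, bus and table names, event source, and detail type are all hypothetical. The clients are injectable so the logic can be exercised without AWS credentials.

```python
import json
import os
from decimal import Decimal

# Hypothetical configuration, read from the Lambda environment with fallbacks.
THRESHOLD = float(os.environ.get("CONFIDENCE_THRESHOLD", "0.85"))
EVENT_BUS = os.environ.get("EVENT_BUS_NAME", "captions-bus")
TABLE_NAME = os.environ.get("FLAG_TABLE_NAME", "flagged-captions")


def handler(event, context, events_client=None, table=None):
    """Lambda entry point; the event shape {"segment_id", "text", "confidence"} is assumed."""
    confidence = float(event["confidence"])
    if confidence >= THRESHOLD:
        return {"status": "ok"}
    if events_client is None or table is None:
        import boto3  # imported lazily so the logic can be tested without AWS
        events_client = events_client or boto3.client("events")
        table = table or boto3.resource("dynamodb").Table(TABLE_NAME)
    # Emit an event that an EventBridge rule can route to moderators or alerting.
    events_client.put_events(Entries=[{
        "Source": "captions.validator",
        "DetailType": "LowConfidenceCaption",
        "Detail": json.dumps(event),
        "EventBusName": EVENT_BUS,
    }])
    # Store the flagged segment for later annotation and retraining.
    table.put_item(Item={
        "segment_id": event["segment_id"],
        "text": event["text"],
        "confidence": Decimal(str(confidence)),  # DynamoDB rejects Python floats
    })
    return {"status": "flagged"}
```

Lambda calls `handler(event, context)` with two arguments, so in production the default `None` clients trigger the real boto3 lookups. An EventBridge rule matching `detail-type` `LowConfidenceCaption` could then route the event to a moderator queue, while the DynamoDB items accumulate as flagged data for retraining.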

Good AI Is Invisible, Until It Fails

The problem wasn’t just bad captions. It was a system that didn’t learn, didn’t adapt, and didn’t recover.

Incomplete modernization is like paving half a road and wondering why no one reaches the destination. GenAI without orchestration isn’t innovation. It's exposure.

If you're deploying AI in high-stakes, real-time environments like media, retail, or healthcare, remember:

Good AI isn’t about replacing humans; it’s about respecting complexity.

And that means building systems that can fail gracefully, improve continuously, and always keep the audience experience at the center.

Modernize accordingly or risk becoming the next headline for all the wrong reasons.