
Ever thought caching was basically impossible in a serverless architecture unless you integrated a server-based caching service?

Not anymore. Momento Serverless Cache is a fully serverless caching service that lets you add caching without the hassle of managing servers, so you no longer need to query the database every time your service is invoked.

Let’s take a step back and understand what caching actually is and how it helps bring down execution time in your application.

Cache

The purpose of a cache is to store data so that it can be served quickly when it is requested again.

Reading data from persistent storage is comparatively slow, which is why we need caching. Whenever data is retrieved or processed, a copy can be kept in faster storage, usually memory. This storage is called a cache: a high-speed data layer whose main job is to minimise trips to slower storage tiers.

Momento Serverless Cache

Momento describes Serverless Cache as the world’s first truly serverless caching service. It provides instant elasticity, scale-to-zero capability, and blazing-fast performance. Gone are the days when you needed to choose, manage, and provision capacity. With Momento Serverless Cache, you grab the SDK, get an auth token, add a few lines to your code, and you’re off and running.

To learn more about how Momento Serverless Cache fits into a serverless application, see “How Momento Serverless Cache fits with standard serverless applications”.

If you’ve looked at the docs, you might have noticed that the service is listed as available in only three regions:

- us-west-2

- us-east-1

- ap-northeast-1

Heads up: they’ve added another AWS region to the service, Mumbai!

- ap-south-1

HooChat

HooChat is a semi-anonymous chat application where you create groups, or “inner circles”, with the people you want to chat with. The application is built so that nobody knows who sent which message. The more people in the group, the more fun it gets.

The second-best thing is that only the last 100 messages are shown; the rest…disappear, the way Thanos turned people to ash. But in this case, no Avengers can help you recover those messages. So the lesson is: “Live in the ‘Momento’!”

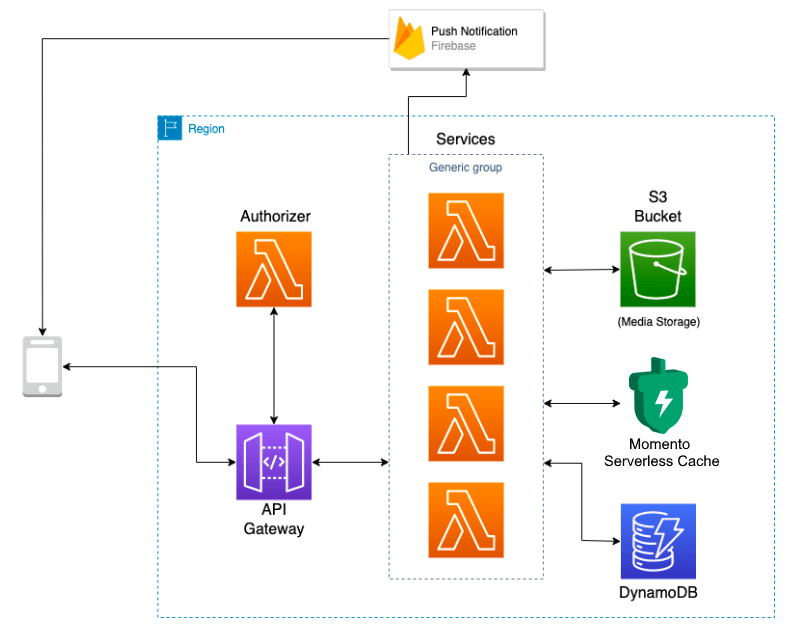

The backend for this application is built entirely on serverless infrastructure, mostly AWS Lambda, DynamoDB, API Gateway, and WebSockets.

The stack is deployed in the AWS Mumbai region, i.e. ap-south-1.

As you can see, the infrastructure is simple. A call to the API first goes through the authorizer.

Once the request is authorised, the Lambda function is invoked; it fetches the data from DynamoDB and returns it. A pretty straightforward architecture.

So, why caching at all? EXECUTION TIME!

Slow execution is one of the quickest ways to ruin how an application feels to its users. Just imagine getting frustrated with an app because it takes too long to respond. We don’t want that, the user doesn’t want that, nobody wants that.

If you’re familiar with serverless, you know what a cold start is. Frustrating, isn’t it?

A cold start is the set-up time needed to get a function’s execution environment up and running when it is invoked for the first time within a given period, for example after it has been sitting idle.

In our case, cold starts usually take about a second; the worst we’ve seen is about 2 seconds. The cold start by itself isn’t a problem. But a few APIs took more than a second to execute on their own, so with a cold start on top, the total response time could add up to 3 or 4 seconds, which in this fast-paced world is like…forever.

Momento’s caching service let us tackle that. After integration and testing, we released it to production and, unsurprisingly, it reduced the average execution time of the problematic APIs by 40%!

The previous approach

Previously, every API call retrieved its data from the database. It didn’t matter whether the call reached an already-warm Lambda function; the function did the same work every time.

Let’s take an example from our own use case.

Say a group has 50 users and one of them sends a message. Our WebSocket handler delivers the message to each member one by one, and each member has to be queried individually to get their details. That makes a total of 50 queries for every message sent.

Batch requests did cut the execution time somewhat, but not enough.
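To make the cost of the previous approach concrete, here is a minimal Python sketch that counts database round trips for one message sent to a 50-member group. `FakeUserTable`, `fan_out_naive`, and `fan_out_batched` are hypothetical names; the table is an in-memory stand-in that only counts round trips and does not reproduce DynamoDB’s API. The default batch size of 100 assumes DynamoDB’s BatchGetItem limit of 100 keys per request.

```python
from dataclasses import dataclass


@dataclass
class FakeUserTable:
    """Hypothetical in-memory stand-in for a users table that counts round trips."""

    users: dict
    round_trips: int = 0

    def get_user(self, user_id: str) -> dict:
        # One round trip per user, like a single-item lookup.
        self.round_trips += 1
        return self.users[user_id]

    def batch_get_users(self, user_ids: list) -> list:
        # One round trip for the whole batch of keys.
        self.round_trips += 1
        return [self.users[uid] for uid in user_ids]


def fan_out_naive(table: FakeUserTable, member_ids: list) -> list:
    # Previous approach: query each member's details one at a time.
    return [table.get_user(uid) for uid in member_ids]


def fan_out_batched(table: FakeUserTable, member_ids: list, batch_size: int = 100) -> list:
    # Batched variant: send keys in chunks of at most `batch_size`
    # (100 assumes DynamoDB's BatchGetItem per-request limit).
    details = []
    for start in range(0, len(member_ids), batch_size):
        details.extend(table.batch_get_users(member_ids[start:start + batch_size]))
    return details


member_ids = [f"user-{i}" for i in range(50)]
users = {uid: {"id": uid, "connection_id": f"conn-{uid}"} for uid in member_ids}

naive_table = FakeUserTable(users)
fan_out_naive(naive_table, member_ids)
print(naive_table.round_trips)  # 50

batched_table = FakeUserTable(users)
fan_out_batched(batched_table, member_ids)
print(batched_table.round_trips)  # 1
```

Batching turns 50 round trips into one, but that request still hits the database on every message, which is why batching alone wasn’t enough. A real BatchGetItem call can also return unprocessed keys that must be retried, which this sketch ignores.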

The current approach

Read-aside caching (a.k.a. “lazy loading”)

With read-aside caching, your application first tries to get the data it needs from the cache. If the data is there, it is returned to the caller. If not, the application requests the data from the primary data source, stores it in the cache for the next attempt, and returns it to the caller.
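The read-aside steps can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Momento’s SDK: `InMemoryCache` is a hypothetical in-process stand-in with `get`/`set` and a per-item TTL, and `read_aside` and `load_user` are hypothetical helpers. In a Lambda function the cache would be a remote service such as Momento, so that all invocations share it.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class InMemoryCache:
    """Hypothetical stand-in for a cache client (NOT the Momento SDK).

    It only mimics get/set with a per-item time-to-live.
    """

    def __init__(self, default_ttl_seconds: float = 300.0):
        self._items: Dict[str, Tuple[Any, float]] = {}
        self._default_ttl = default_ttl_seconds

    def get(self, key: str) -> Optional[Any]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # Expired items behave like misses.
            del self._items[key]
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: Optional[float] = None) -> None:
        ttl = self._default_ttl if ttl_seconds is None else ttl_seconds
        self._items[key] = (value, time.monotonic() + ttl)


def read_aside(cache: InMemoryCache, key: str, load_from_db: Callable[[str], Any]) -> Any:
    # 1. Try the cache first.
    cached = cache.get(key)
    if cached is not None:
        return cached  # cache hit: return straight to the caller
    # 2. Cache miss: fall back to the primary data source.
    value = load_from_db(key)
    # 3. Store it for the next attempt, then return it.
    cache.set(key, value)
    return value


db_calls = []


def load_user(key: str) -> dict:
    db_calls.append(key)  # record each trip to the "database"
    return {"name": "anonymous"}


cache = InMemoryCache()
read_aside(cache, "user#42", load_user)  # miss: loads from the database
read_aside(cache, "user#42", load_user)  # hit: served from the cache
print(len(db_calls))  # 1
```

Because `None` signals a miss here, a loader that legitimately returns `None` would hit the database on every call; a real cache client usually distinguishes hits from misses explicitly.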

Let’s take the same example from the previous approach.

With the caching mechanism now integrated into our application:

- When a user signs in, their details are stored in the cache if they aren’t there already.

- Our custom authorizer follows the same approach: if a user hasn’t signed in since the cache was integrated, their details are stored in the cache during authorization.

- Group details are cached the same way: the first time a user’s group details are fetched, they go through the same steps and end up in the cache.

Now, when a user sends a message to a group of 50 users, the WebSocket handler looks up each member’s details in the cache. Only on a cache miss does it query the database, and that rarely happens.
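Putting the sign-in step and the fan-out together, the following hypothetical Python sketch shows sign-in warming the cache and the WebSocket handler reading each member through it. `DictCache`, `UserStore`, `on_sign_in`, `member_details`, `broadcast`, and the `user#<id>` key scheme are illustrative assumptions; `send` is a placeholder for whatever actually pushes the message over the WebSocket connection.

```python
from typing import Any, Callable, Dict, List


class DictCache:
    """Hypothetical, minimal stand-in for a remote cache (no TTL, not Momento's API)."""

    def __init__(self):
        self._items: Dict[str, Any] = {}

    def get(self, key: str):
        return self._items.get(key)

    def set(self, key: str, value: Any) -> None:
        self._items[key] = value


class UserStore:
    """Hypothetical database wrapper that counts queries."""

    def __init__(self, users: Dict[str, dict]):
        self._users = users
        self.queries = 0

    def get_user(self, user_id: str) -> dict:
        self.queries += 1
        return self._users[user_id]


def user_key(user_id: str) -> str:
    return f"user#{user_id}"  # hypothetical key scheme


def on_sign_in(cache: DictCache, store: UserStore, user_id: str) -> None:
    # Sign-in (and, similarly, the authorizer) caches the user if not already there.
    if cache.get(user_key(user_id)) is None:
        cache.set(user_key(user_id), store.get_user(user_id))


def member_details(cache: DictCache, store: UserStore, user_id: str) -> dict:
    # Read-aside lookup used by the WebSocket handler.
    details = cache.get(user_key(user_id))
    if details is None:
        details = store.get_user(user_id)
        cache.set(user_key(user_id), details)
    return details


def broadcast(cache: DictCache, store: UserStore, member_ids: List[str],
              send: Callable[[str], Any]) -> None:
    # Deliver to each member one by one, as the handler does.
    for uid in member_ids:
        send(member_details(cache, store, uid)["connection_id"])


member_ids = [f"u{i}" for i in range(50)]
store = UserStore({uid: {"id": uid, "connection_id": f"conn-{uid}"} for uid in member_ids})
cache = DictCache()
for uid in member_ids[:48]:  # 48 members have signed in since caching was added
    on_sign_in(cache, store, uid)

sent = []
before = store.queries
broadcast(cache, store, member_ids, sent.append)
print(len(sent), store.queries - before)  # 50 2
```

In this usage example, 48 of the 50 members have signed in since the cache was added, so the broadcast queries the store only for the remaining 2; a second message to the same group would not query it at all.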

Write-aside caching

With write-aside caching, we still use a centralised cache that sits beside the database, just as with read-aside caching. However, rather than lazily loading items into the cache the first time they are read, we proactively push data to the cache whenever we write it.

We applied this pattern as well, so the data in the cache stays up to date whenever it changes.
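Write-aside can be sketched in the same style. In this hypothetical Python example, `save_group` writes to the primary store and then pushes the same item to the cache, while `get_group` keeps the read-aside fallback for misses. `DictCache`, `GroupTable`, and the `group#<id>` key scheme are assumptions for illustration, not HooChat’s actual code or Momento’s API.

```python
from typing import Any, Dict


class DictCache:
    """Hypothetical, minimal stand-in for a remote cache (not Momento's API)."""

    def __init__(self):
        self._items: Dict[str, Any] = {}

    def get(self, key: str):
        return self._items.get(key)

    def set(self, key: str, value: Any) -> None:
        self._items[key] = value


class GroupTable:
    """Hypothetical stand-in for the database table holding group details."""

    def __init__(self):
        self.rows: Dict[str, dict] = {}
        self.reads = 0

    def put(self, group_id: str, item: dict) -> None:
        self.rows[group_id] = item

    def get(self, group_id: str) -> dict:
        self.reads += 1
        return self.rows[group_id]


def group_key(group_id: str) -> str:
    return f"group#{group_id}"  # hypothetical key scheme


def save_group(table: GroupTable, cache: DictCache, group_id: str, item: dict) -> None:
    # Write-aside: write to the primary store first...
    table.put(group_id, item)
    # ...then proactively push the same data into the cache.
    cache.set(group_key(group_id), item)


def get_group(table: GroupTable, cache: DictCache, group_id: str) -> dict:
    # Reads stay read-aside, so a cache miss still falls back to the table.
    item = cache.get(group_key(group_id))
    if item is None:
        item = table.get(group_id)
        cache.set(group_key(group_id), item)
    return item


table, cache = GroupTable(), DictCache()
save_group(table, cache, "g1", {"name": "inner circle", "members": ["u1", "u2"]})
save_group(table, cache, "g1", {"name": "inner circle", "members": ["u1", "u2", "u3"]})
print(get_group(table, cache, "g1")["members"], table.reads)  # ['u1', 'u2', 'u3'] 0
```

Writing the database first means a failed cache write can leave a stale entry until it is overwritten or expires, so giving cached items a TTL is a common safeguard.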

Together, these two patterns produced the 40% reduction in average execution time mentioned earlier.

To sum it up, Momento Serverless Cache has helped the application achieve amazing results just by adding a few lines of code.

We thank the Momento team for the immense support and help given to us during the integration.